NOTE: If you choose an IDE other than Eclipse, make sure it supports both Scala and Java syntax.

#Install Apache Spark on MapR#

Install Visual Studio Code for your development environment, then install the Scala Syntax extension from the Visual Studio Code marketplace to add Scala syntax support. Developers bypass the added expense of paying fees for subscriptions or licenses. Spark's framework is written in Scala, while Hadoop is built in Java. Building on Scala is meant to help Spark outperform plain Java.

Scala favors a functional style over purely object-oriented paradigms, and there are benefits to using Scala's framework. However, the steps given here for setting up Hadoop, Spark, and Scala don't apply to Windows. They will work if you use Linux, macOS, or a similar system. NOTE: Proceed with this tutorial only if your operating system is UNIX-based.
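A quick way to confirm the operating system before continuing is to ask the shell for the kernel name. This is only a sketch: the variable name `os_name` and the wording of the messages are illustrative, and the list of accepted systems is an assumption rather than an exhaustive list.

```shell
#!/usr/bin/env bash
# uname -s prints the kernel name, for example Linux, or Darwin on macOS.
os_name="$(uname -s)"

case "$os_name" in
  Linux|Darwin|*BSD)
    echo "UNIX-based system detected: $os_name"
    ;;
  *)
    # Anything else (for example a Windows shell layer) is outside this tutorial's scope.
    echo "Unrecognized system: $os_name; these steps may not apply"
    ;;
esac
```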

#How to install Apache Spark on MapR#

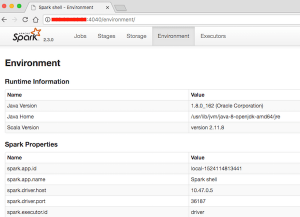

This tutorial explains how to set up the big three essentials for large dataset processing: Hadoop, Spark, and Scala.

Scala, short for Scalable Language, is an object-oriented, functional, statically typed programming language that runs on the Java Virtual Machine (JVM). Apache Spark, which is written in Scala, offers high-performance processing of large datasets.

Hive permissions bug (Windows): Create the folder D:\tmp\hive. Open cmd using the Run as administrator option and execute the following command:

cmd> winutils.exe chmod -R 777 D:\tmp\hive

Then check the permissions. This fixed some bugs I had after installing Spark.

Make sure your cluster is not accessible from the outside world. Log in to the master node as user hadoop to install. After downloading Spark, unpack the distribution in a directory.
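The unpacking step can be sketched as follows. The archive name, the `SPARK_ARCHIVE` variable, and the directories are hypothetical placeholders, not names from this tutorial. If no real archive is supplied, the script builds a tiny stand-in archive so that it runs end to end.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical locations; point SPARK_ARCHIVE at the file you actually downloaded.
WORK_DIR="$(mktemp -d)"
ARCHIVE="${SPARK_ARCHIVE:-$WORK_DIR/spark-demo.tgz}"
INSTALL_DIR="$WORK_DIR/install"

# No real archive given: create a small stand-in so the sketch is runnable.
if [ ! -f "$ARCHIVE" ]; then
  mkdir -p "$WORK_DIR/spark-demo/bin"
  echo "placeholder" > "$WORK_DIR/spark-demo/bin/spark-shell"
  tar -czf "$ARCHIVE" -C "$WORK_DIR" spark-demo
fi

mkdir -p "$INSTALL_DIR"

# -x extracts, -v lists each file as it is written, -f names the archive,
# and -C selects the directory to unpack into.
tar -xvf "$ARCHIVE" -C "$INSTALL_DIR"

ls "$INSTALL_DIR"
```

Unpacking into a dedicated directory with `-C` keeps the distribution separate from the download location, which makes it easier to remove or replace later.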

Large dataset processing requires a reliable way to handle and distribute heavy workloads quickly, along with easy application building. The open-source Apache Hadoop framework is used to process huge datasets across cluster nodes. Its distributed file system, called HDFS, is at the heart of Hadoop. Because Hadoop is built in Java, it works well with simple programming models, which gives it a vast amount of scaling capability. Apache Spark is an analytics engine that can run on top of Hadoop. Linux users: Apache keeps archived versions of Hadoop for your type of OS.

Spark knows two roles for your machines: master node and worker node. The master coordinates the distribution of work, and the workers do the actual work. By default, a Spark cluster has no security.

Download the Apache Spark distribution: After installing Java 8 in step 1, download Spark and choose "Pre-built for Apache Hadoop 2.7 and later", as shown in the picture. Next, input the command tar -xvf followed by the path of the archive you downloaded, including the filename.

Before starting the configuration, you first need to format the namenode. After that, it's time to start the HDFS and YARN services. This starts the name node on the master node as well as the data nodes on all of the worker nodes.
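The namenode formatting and the HDFS and YARN start-up mentioned above might look like the sketch below. It assumes a standard Apache Hadoop layout with `bin/hdfs`, `sbin/start-dfs.sh`, and `sbin/start-yarn.sh` under `HADOOP_HOME`; the `/path/to/hadoop` default, the `DRY_RUN` switch, and the `run` helper are hypothetical additions for illustration. By default the script only prints what it would execute.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Assumption: HADOOP_HOME points at your Hadoop installation.
HADOOP_HOME="${HADOOP_HOME:-/path/to/hadoop}"
# DRY_RUN=1 (the default here) prints commands instead of executing them.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: run a command, or just show it in dry-run mode.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "would run: $*"
  else
    "$@"
  fi
}

# 1. Format the namenode once, before the first start.
#    Formatting erases existing HDFS metadata, so do not repeat it on a live cluster.
run "$HADOOP_HOME/bin/hdfs" namenode -format

# 2. Start HDFS: the name node on the master and the data nodes on the workers.
run "$HADOOP_HOME/sbin/start-dfs.sh"

# 3. Start the YARN services.
run "$HADOOP_HOME/sbin/start-yarn.sh"
```

Run it as the hadoop user on the master node, and set `DRY_RUN=0` only once `HADOOP_HOME` is correct for your machine.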
